Introduction

Random Forests are an ensemble learning method (also thought of as a form of nearest neighbor predictor) for classification and regression that construct a number of decision trees at training time and outputting the class that is the mode of the classes output by individual trees (Random Forests is a trademark of Leo Breiman and Adele Cutler for an ensemble of decision trees).

Random Forests are a combination of tree predictors where each tree depends on the values of a random vector sampled independently with the same distribution for all trees in the forest. The basic principle is that a group of “weak learners” can come together to form a “strong learner”. Random Forests are a wonderful tool for making predictions considering they do not overfit because of the law of large numbers. Introducing the right kind of randomness makes them accurate classifiers and regressors.

Single decision trees often have high variance or high bias. Random Forests attempts to mitigate the problems of high variance and high bias by averaging to find a natural balance between the two extremes. Considering that Random Forests have few parameters to tune and can be used simply with default parameter settings, they are a simple tool to use without having a model or to produce a reasonable model fast and efficiently.

History

The general method of random decision forests was first proposed by Ho in 1995. Ho established that forests of trees splitting with oblique hyperplanes can gain accuracy as they grow without suffering from overtraining, as long as the forests are randomly restricted to be sensitive to only selected feature dimensions. A subsequent work along the same lines concluded that other splitting methods, as long as they are randomly forced to be insensitive to some feature dimensions, behave similarly. Note that this observation of a more complex classifier (a larger forest) getting more accurate nearly monotonically is in sharp contrast to the common belief that the complexity of a classifier can only grow to a certain level of accuracy before being hurt by overfitting. The explanation of the forest method's resistance to overtraining can be found in Kleinberg's theory of stochastic discrimination.

The early development of Breiman's notion of random forests was influenced by the work of Amit and Geman who introduced the idea of searching over a random subset of the available decisions when splitting a node, in the context of growing a single tree. The idea of random subspace selection from Ho was also influential in the design of random forests. In this method a forest of trees is grown, and variation among the trees is introduced by projecting the training data into a randomly chosen subspace before fitting each tree or each node. Finally, the idea of randomized node optimization, where the decision at each node is selected by a randomized procedure, rather than a deterministic optimization was first introduced by Dietterich.

The introduction of random forests proper was first made in a paper by Leo Breiman. This paper describes a method of building a forest of uncorrelated trees using a CART like procedure, combined with randomized node optimization and bagging. In addition, this paper combines several ingredients, some previously known and some novel, which form the basis of the modern practice of random forests, in particular:

Using out-of-bag error as an estimate of the generalization error.

Measuring variable importance through permutation.

The report also offers the first theoretical result for random forests in the form of a bound on the generalization error which depends on the strength of the trees in the forest and their correlation.

In this article, you are going to learn the most popular classification algorithm Which is the random forest algorithm.

figure courtesy - http://paolaelefante.com/2016/03/random-forest-part1

Suppose Mady somehow got 2 weeks leave from his office. She wants to spend his 2 weeks by travelling to the different place. She also wants to go to the place she may like.

So she decided to ask her best friend about the places she may like. Then her friend started asking about her past trips. It’s just like her best friend will ask, You have been visited the X place did you like it? Based on the answers which are given by Mady, her best friend starts recommending the place Mady may like. Here her best friend formed the decision tree with the answer given by Mady.

As her best friend may recommend his best place to Mady as a friend. The model will be biased with the closeness of their friendship. So she decided to ask a few more friends to recommend the best place she may like.

Now her friends asked some random questions and each one recommended one place to Mady. Now Mady considered the place which is high votes from her friends as the final place to visit.

In the above Mady trip planning, two main interesting algorithms decision tree algorithm and random forest algorithm used.

Decision Tree:

To recommend the best place to Mady, her best friend asked some questions. Based on the answers given by mady, he recommended a place. This is a decision tree algorithm approach as we have seen in previous post. Mady's friend used the answers given by Mady to create rules. Later he used the created rules to recommend the best place which he thought Mady will like. These rules could be, Mady likes a place with lots of tree or waterfalls ..etc In the above approach Mady's best friend is the decision tree. The vote (recommended place) is the leaf of the decision tree (Target class). The target is finalized by a single person, In a technical way of saying, using a single decision tree.

Random Forest Algorithm:

In the other case when Mady asked her other friends to recommend the best place to visit, each friend asked her different questions and come up with their recommendation for a place to visit. Later Mady consider all the recommendations and calculated the votes. Votes basically is to pick the popular place from the recommend places from all her friends. Mady will consider each recommended place and if the same place recommended by some other friend she will increase the count. At the end the highest count place is where Mady will go.

In this case, the recommended place (Target Prediction) is considered by many friends. Each friend is the tree and the combination of all friends will form the forest. This forest is the random forest, as each friend asked random questions to recommend the best place visit.

Now let’s use the above example to understand how the random forest algorithm work.

Applications

Random Forest Applications

The random algorithm used in wide varieties applications. In this article, we are going address few of them.

Below are some the application where random forest algorithm is widely used.

- Banking

- Medicine

- Stock Market

- E-commerce

Let’s begin with the banking sector.

1.Banking:

In the banking sector, random forest algorithm widely used in two main application. These are for finding the loyal customer and finding the fraud customers.

The loyal customer means not the customer who pays well, but also the customer whom can take the huge amount as loan and pays the loan interest properly to the bank. As the growth of the bank purely depends on the loyal customers. The bank customers data highly analyzed to find the pattern for the loyal customer based the customer details.

In the same way, there is need to identify the customer who are not profitable for the bank, like taking the loan and paying the loan interest properly or find the outlier customers. If the bank can identify theses kind of customer before giving the loan the customer. Bank will get a chance to not approve the loan to these kinds of customers. In this case, also random forest algorithm is used to identify the customers who are not profitable for the bank.

2.Medicine

In medicine field, random forest algorithm is used identify the correct combination of the components to validate the medicine. Random forest algorithm also helpful for identifying the disease by analyzing the patient’s medical records.

3.Stock Market

In the stock market, random forest algorithm used to identify the stock behavior as well as the expected loss or profit by purchasing the particular stock.

4.E-commerce

In e-commerce, the random forest used only in the small segment of the recommendation engine for identifying the likelihood of customer liking the recommend products base on the similar kinds of customers.

Running random forest algorithm on very large dataset requires high-end GPU systems. If you are not having any GPU system. You can always run the machine learning models in cloud hosted desktop. You can use clouddesktoponline platform to run high-end machine learning models from sitting any corner of the world.

The Model

Formally, a random forest is a predictor consisting of a collection of randomized base regression tree

{rn(x,θm,Dn)m≥1}, where Θ1, , Θ2 … are i.i.d. outputs of a randomizing variable Θ . These random trees are combined to form the aggregated regression estimate

where Eθ denotes expectation with respect to the random parameter, conditionally on X and the data set Dn. In practice, the above expectation is evaluated by Monte Carlo, i.e., by generating M (usually large) random trees, and taking the average of the individual outcomes The randomizing variable Θ is used to determine how the successive cuts are performed when building the individual trees, such as selection of the coordinate to split and position of the split.

The Algorithm

As the name suggests, a Random Forest is a tree-based ensemble with each tree depending on a collection of random variables. More formally, for a p-dimensional random vector X = (X1,…,Xp)T representing the real-valued input or predictor variables and a random variable Y representing the real-valued response, we assume an unknown joint distribution PXY(X,Y). The goal is to find a prediction function f (X) for predicting Y. The prediction function is determined by a loss function L(Y, f (X)) and defined to minimize the expected value of the loss

where the subscripts denote expectation with respect to the joint distribution of X and Y.

Intuitively, L(Y, f (X)) is a measure of how close f (X) is to Y; it penalizes values of f (X) that are a long way from Y. Typical choices of L are squared error loss L(Y, f (X)) = (Y - f(X) )2 for regression and zero-one loss for classification:

It turns out that minimizing EXY(L(Y, f (X))) for squared error loss gives the conditional expectation

f (x) = E(Y|X = x) (3)

otherwise known as the regression function. In the classification situation, if the set of possible values of Y is denoted by Y , minimizing EXY(L(Y, f(X)) for zero-one loss gives

otherwise known as the Bayes rule.

Ensembles construct f in terms of a collection of so-called “base learners” h1(x),

…, hJ(x)

and these base learners are combined to give the “ensemble predictor” f (x). In regression, the base learners are averaged

while in classification, f (x) is the most frequently predicted class (“voting”)

In Random

Forests the jth base learner is a tree denoted hj(X,θj),

where θj is a collection of

random variables and the θj’s are independent for j = 1,

. . . , J.

Definition

As mentioned earlier in this Section, a

Random Forest uses trees hj(X,Θj) as

base learners. For training data D

= {(x1, y1), . . . , (xN,

yN)}, where xi = (xi,1,

. . . , xi,p)T denotes the p predictors and yi

denotes the response, and a particular realization θj of Θj , the fitted tree

is denoted ĥ j(x, θj, D). While this

is the original formulation from Breiman, in practice the random

component θj is not considered explicitly but is implicitly used to inject

randomness in two ways. First, as with bagging, each tree is fit to an independent bootstrap

sample from the original data. The randomization involved in bootstrap sampling gives one

part of θj . Second, when splitting a node, the best split is found over a

randomly selected subset of m predictor variables instead of all p predictors,

independently at each node. The randomization used to sample the predictors gives the remaining

part of θj . The resulting trees are combined by unweighted voting if the response is categorical (classification) or unweighted averaging if the response is continuous (regression).

Random Forest Algorithm

Let D = {(x1, y1),

. . . , (xN, yN)} denote the training data,

with xi = (xi,1, . . . , xi,p)T

. For j = 1 to J:

1. Take a

bootstrap sample Dj

of size N from D.

2. Using the

bootstrap sample Dj as the training data, fit a tree using

binary recursive partitioning :

a. Start with all observations in a

single node.

b. Repeat the following steps

recursively for each unsplit node until the stopping criterion is met:

i. Select m predictors at

random from the p available predictors.

ii. Find the best binary split among

all binary splits on the m predictors from step i.

iii. Split the node into two

descendant nodes using the split from step ii.

To make a

prediction at a new point x,

ŷ = 1/J(Σĥj(x)) for regression

ŷ = argmaxyΣI(ĥj(x)

= y) for classification

where ĥj(x) is the prediction

of the response variable at x using the jth tree

Diagrammatically

Tuning

Although Random Forests have the reputation of working quite well right out of the box, there are three parameters that may be tuned to give improved accuracy for particular situations:

m, the number of randomly selected predictor variables chosen at each node

J, the number of trees in the forest

tree size, as measured by the smallest node size for splitting or the maximum

number of terminal nodes

The only one of these parameters to which Random Forests is somewhat sensitive appears to be m. In classification, the standard default is m = √M, where M is the total number of predictors. In regression, the default is m = N/3, where N is the sample size. If tuning is necessary, m can be chosen using the out-of-bag error rate, but then this no longer gives an unbiased estimate of generalization error. However, typically Random Forests are not very sensitive to m, so fine-tuning is not required and overfitting effects due to choice of m should be relatively small

Advantages of random forest algorithm

Below are the advantages of random forest algorithm compared with other classification algorithms.

- Accuracy

- Runs efficiently on large data bases

- Handles thousands of input variables without variable deletion

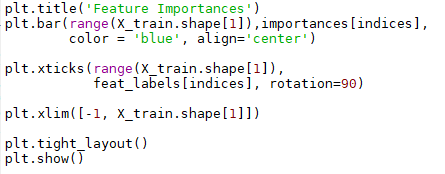

- Gives estimates of what variables are important in the classification

- Generates an internal unbiased estimate of the generalization error as the forest building progresses

- Provides effective methods for estimating missing data

- Maintains accuracy when a large proportion of the data are missing

- Provides methods for balancing error in class population unbalanced data sets

- Generated forests can be saved for future use on other data

- Prototypes are computed that give information about the relation between the variables and the classification.

- Computes proximities between pairs of cases that can be used in clustering, locating outliers, or (by scaling) give interesting views of the data

- Capabilities of the above can be extended to unlabeled data, leading to unsupervised clustering, data views and outlier detection

- Offers an experimental method for detecting variable interactions

- https://dataaspirant.com/2017/05/22/random-forest-algorithm-machine-learning

- https://www.datasciencecentral.com/profiles/blogs/random-forests-algorithm

- https://en.wikipedia.org/wiki/Random_forest#History

- Analysis of a Random Forests Model by G´erard Biau

- RANDOM FORESTS by Leo Breiman

- Random Forests by Adele Cutler, D. Richard Cutler and John R. Stevens