RANDOM FOREST CODE

A decision tree is the building block of a random forest and, by itself, is a rather intuitive model. You might be tempted to ask: why not just use one decision tree? It seems like the perfect classifier, since it did not make any mistakes! The reasons to use a random forest instead are the following:

1. Random forests work well when the data is not linearly separable.

2. It uses a bagging (bootstrap aggregating) approach: it builds many decision trees, each trained on a random subset of the data drawn with replacement.

3. The outputs of all the decision trees in the random forest are combined to make a final decision:

a. The final decision is made by polling the results of the individual decision trees.

b. The final decision is the prediction that appears most often across the decision trees (a majority vote).

4. It maintains accuracy when part of the data is missing and is resistant to outliers.

5. It saves data preparation time; for example, tree-based models do not need feature scaling or normalization.
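Points 2 and 3 can be sketched by hand. The snippet below is a minimal illustration, not scikit-learn's internals: it trains several decision trees on bootstrap samples of synthetic data (generated with make_classification, an assumption made for illustration) and combines them by majority vote. The helper names fit_bagged_trees and majority_vote are hypothetical.

```python
from collections import Counter

import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_bagged_trees(X, y, n_trees=25, seed=0):
    """Hypothetical helper: train n_trees trees, each on a bootstrap sample of (X, y)."""
    rng = np.random.default_rng(seed)
    n = len(X)
    trees = []
    for i in range(n_trees):
        # Bootstrap sample: draw n row indices with replacement.
        idx = rng.integers(0, n, size=n)
        # max_features="sqrt" also randomizes the features tried at each split,
        # which is what makes the ensemble a random forest rather than plain bagging.
        tree = DecisionTreeClassifier(max_features="sqrt", random_state=i)
        tree.fit(X[idx], y[idx])
        trees.append(tree)
    return trees


def majority_vote(trees, X):
    """Hypothetical helper: for each row, return the class predicted by most trees."""
    all_preds = np.array([t.predict(X) for t in trees])  # shape (n_trees, n_samples)
    return np.array([Counter(col).most_common(1)[0][0] for col in all_preds.T])


# Synthetic two-class data, used only for illustration.
X, y = make_classification(n_samples=300, n_features=6, random_state=0)
trees = fit_bagged_trees(X, y)
pred = majority_vote(trees, X[:5])
print(pred)
```

Note that scikit-learn's RandomForestClassifier averages the trees' predicted class probabilities instead of counting hard votes, so its predictions can differ slightly from this sketch.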

A critical point to remember is that the tree made no mistakes on the training data. We expect this, since we gave the tree the answers. The point of a machine learning model, however, is to generalize well to the testing data. Unfortunately, when we do not limit the depth of a decision tree, it tends to overfit the training data. Overfitting occurs when a model has high variance and essentially memorizes the training data. It can then do very well, even perfectly, on the training data, yet fail to make accurate predictions on the test data, because the test data is different! What we want is a model that learns the training data well and also generalizes to the testing data.

A decision tree is prone to overfitting when we don't limit its maximum depth because it then has unlimited complexity: it can keep growing until it has exactly one leaf node for every single observation, perfectly classifying all of them. Combining many such trees, each trained on a different random subset of the data, is how a random forest reduces this variance.
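The overfitting described above is easy to observe. This is a minimal sketch, assuming synthetic data from make_classification with some label noise: an unlimited-depth tree scores perfectly on the training split, and its test score can be compared with a depth-limited tree and a random forest. Exact numbers depend on the data and the seeds.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data; flip_y=0.05 assigns a random class to about 5% of samples (label noise).
X, y = make_classification(n_samples=1000, n_features=10, n_informative=4,
                           flip_y=0.05, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

models = {
    "tree (no depth limit)": DecisionTreeClassifier(random_state=42),
    "tree (max_depth=4)": DecisionTreeClassifier(max_depth=4, random_state=42),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=42),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # (training accuracy, test accuracy)
    scores[name] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"{name:24s} train={scores[name][0]:.3f} test={scores[name][1]:.3f}")
```

The gap between training and test accuracy is the thing to look at: a large gap signals that the model memorized the training data.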

Random Forest Features

We can think of a decision tree as a flowchart of questions asked about our data, eventually leading to a predicted class (or a continuous value in the case of regression): we ask a series of questions about the data until we arrive at a decision. Building a random forest model follows the usual machine learning workflow:

1. State the question and determine the required data [1]

2. Set the data in an accessible format

3. Identify and correct missing data points as required

4. Prepare the data for the machine learning model

5. Train the model on the training data

6. Make predictions on the test data

7. Compare predictions to the known test set targets and calculate performance metrics

8. If performance is not satisfactory, adjust the model, acquire more data, or try a different modeling technique

9. Interpret model and report results visually and numerically
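Steps 3 to 7 of this workflow map onto a few scikit-learn calls. This is a sketch under stated assumptions: the data is synthetic, about 5% of its values are blanked out to simulate missing data, and median imputation (SimpleImputer) is just one possible way to handle step 3.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.metrics import accuracy_score, classification_report
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline

# Steps 1-2 (assumed): a numeric feature matrix X and class labels y.
X, y = make_classification(n_samples=500, n_features=8, random_state=0)

# Simulate missing values (about 5% of entries) so step 3 has something to fix.
rng = np.random.default_rng(0)
X[rng.random(X.shape) < 0.05] = np.nan

# Step 4: hold out a test set that the model never sees during training.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y)

# Steps 3 and 5: fill missing values with the column median, then train the forest.
model = make_pipeline(SimpleImputer(strategy="median"),
                      RandomForestClassifier(n_estimators=100, random_state=0))
model.fit(X_train, y_train)

# Step 6: make predictions on the test data.
y_pred = model.predict(X_test)

# Step 7: compare predictions with the known test targets.
acc = accuracy_score(y_test, y_pred)
print(f"accuracy: {acc:.3f}")
print(classification_report(y_test, y_pred))
```

Wrapping the imputer and the forest in one pipeline means the imputation medians are learned from the training split only, which keeps the test set unseen.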

Example

We will learn random forests using the balance-weight example that we already worked through with a decision tree: http://fiascodata.blogspot.com/2018/06/. Now that we have the decision tree, let us see how to use the random forest machine learning algorithm.

Step 1

Import the libraries needed for the random forest classifier and load the balance data .csv file:
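The code for this step is not shown in the post, so here is a minimal sketch. It assumes the balance data is the UCI Balance Scale dataset (no header row; columns Class, Left-Weight, Left-Distance, Right-Weight, Right-Distance). The file name balance_data.csv and the helpers load_balance_csv and make_balance_scale are hypothetical. The second helper rebuilds all 625 rows from the rule that defines the dataset (compare left weight * left distance with right weight * right distance), so the snippet runs without the file.

```python
import itertools

import pandas as pd
from sklearn.ensemble import RandomForestClassifier  # used in later steps
from sklearn.model_selection import train_test_split  # used in later steps

# Column names assumed from the UCI Balance Scale description (the file has no header row).
COLUMNS = ["Class", "Left-Weight", "Left-Distance", "Right-Weight", "Right-Distance"]


def load_balance_csv(path):
    """Hypothetical helper: read a local copy of the balance data."""
    return pd.read_csv(path, header=None, names=COLUMNS)


def make_balance_scale():
    """Hypothetical helper: rebuild the dataset from its defining rule.
    L = tips left, R = tips right, B = balanced."""
    rows = []
    for lw, ld, rw, rd in itertools.product(range(1, 6), repeat=4):
        left, right = lw * ld, rw * rd
        label = "L" if left > right else "R" if left < right else "B"
        rows.append([label, lw, ld, rw, rd])
    return pd.DataFrame(rows, columns=COLUMNS)


# With the file available: balance_data = load_balance_csv("balance_data.csv")
balance_data = make_balance_scale()
print(balance_data.shape)
print(balance_data["Class"].value_counts())
```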

Step 2

To check feature importance later, select the feature columns (and the target column) by their index.
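A sketch of this step, assuming the column layout from Step 1 (target in column 0, features in columns 1 to 4). The data is rebuilt inline so the snippet runs on its own.

```python
import itertools

import pandas as pd

COLUMNS = ["Class", "Left-Weight", "Left-Distance", "Right-Weight", "Right-Distance"]

# Rebuild the balance data (as in Step 1) so this snippet is self-contained.
rows = []
for lw, ld, rw, rd in itertools.product(range(1, 6), repeat=4):
    left, right = lw * ld, rw * rd
    rows.append(["L" if left > right else "R" if left < right else "B", lw, ld, rw, rd])
balance_data = pd.DataFrame(rows, columns=COLUMNS)

# Select by position: column 0 is the target, columns 1-4 are the features.
X = balance_data.iloc[:, 1:5]
y = balance_data.iloc[:, 0]
feature_names = list(X.columns)
print(feature_names)
```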

Step 3

Now create the random forest classifier. Its main parameters and fitted attributes are:

· n_jobs : integer, optional (default=1). The number of jobs to run in parallel for both fit and predict. If -1, then the number of jobs is set to the number of cores. (Newer scikit-learn versions default to None, which means 1 unless a joblib parallel backend context says otherwise.)

· random_state : int, RandomState instance or None, optional (default=None). If int, random_state is the seed used by the random number generator; if RandomState instance, random_state is the random number generator; if None, the random number generator is the RandomState instance used by np.random.

· classes_ : array of shape = [n_classes] or a list of such arrays. The class labels (single output problem), or a list of arrays of class labels (multi-output problem).

· n_classes_ : int or list. The number of classes (single output problem), or a list containing the number of classes for each output (multi-output problem).

· feature_importances_ : array of shape = [n_features]. The feature importances (the higher, the more important the feature).
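A sketch of creating the classifier with the parameters above and reading its fitted attributes, assuming the balance data from the previous steps (rebuilt inline). n_estimators=100 and random_state=0 are arbitrary choices.

```python
import itertools

import pandas as pd
from sklearn.ensemble import RandomForestClassifier

COLUMNS = ["Class", "Left-Weight", "Left-Distance", "Right-Weight", "Right-Distance"]

# Rebuild the balance data (as in Step 1) so this snippet is self-contained.
rows = []
for lw, ld, rw, rd in itertools.product(range(1, 6), repeat=4):
    left, right = lw * ld, rw * rd
    rows.append(["L" if left > right else "R" if left < right else "B", lw, ld, rw, rd])
balance_data = pd.DataFrame(rows, columns=COLUMNS)
X, y = balance_data.iloc[:, 1:5], balance_data.iloc[:, 0]

# n_jobs=-1 uses all cores; a fixed random_state makes runs repeatable.
clf = RandomForestClassifier(n_estimators=100, n_jobs=-1, random_state=0)
clf.fit(X, y)

print(clf.classes_)              # class labels seen during fit, sorted
print(clf.n_classes_)            # number of classes
print(clf.feature_importances_)  # one value per feature, summing to 1
```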

Step 4

Now train the model on the training data, evaluate it on the test data, and print the feature importance values:
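A sketch of this step under the same assumptions (balance data rebuilt inline). The 70/30 split and the seeds are arbitrary choices, so the accuracy and importance values you see will depend on them.

```python
import itertools

import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

COLUMNS = ["Class", "Left-Weight", "Left-Distance", "Right-Weight", "Right-Distance"]

# Rebuild the balance data (as in Step 1) so this snippet is self-contained.
rows = []
for lw, ld, rw, rd in itertools.product(range(1, 6), repeat=4):
    left, right = lw * ld, rw * rd
    rows.append(["L" if left > right else "R" if left < right else "B", lw, ld, rw, rd])
balance_data = pd.DataFrame(rows, columns=COLUMNS)
X, y = balance_data.iloc[:, 1:5], balance_data.iloc[:, 0]

# Train on 70% of the rows, test on the remaining 30%.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=100)

clf = RandomForestClassifier(n_estimators=100, n_jobs=-1, random_state=0)
clf.fit(X_train, y_train)
y_pred = clf.predict(X_test)
acc = accuracy_score(y_test, y_pred)
print(f"Accuracy: {acc:.3f}")

# Pair each feature name with its importance, most important first.
importances = sorted(zip(X.columns, clf.feature_importances_),
                     key=lambda pair: pair[1], reverse=True)
for name, score in importances:
    print(f"{name:15s} {score:.3f}")
```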

Step 5

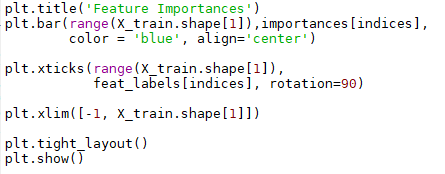

Now plot the feature importances as a graph for visualization:
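One way to draw this graph, assuming matplotlib is installed: a horizontal bar chart of feature_importances_, sorted so that the most important feature appears at the top. The balance data is rebuilt inline as before, and the figure size and titles are arbitrary.

```python
import itertools

import matplotlib.pyplot as plt
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

COLUMNS = ["Class", "Left-Weight", "Left-Distance", "Right-Weight", "Right-Distance"]

# Rebuild the balance data (as in Step 1) so this snippet is self-contained.
rows = []
for lw, ld, rw, rd in itertools.product(range(1, 6), repeat=4):
    left, right = lw * ld, rw * rd
    rows.append(["L" if left > right else "R" if left < right else "B", lw, ld, rw, rd])
balance_data = pd.DataFrame(rows, columns=COLUMNS)
X, y = balance_data.iloc[:, 1:5], balance_data.iloc[:, 0]

clf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Ascending sort: barh draws the first item at the bottom, so the largest ends up on top.
importances = pd.Series(clf.feature_importances_, index=X.columns).sort_values()

fig, ax = plt.subplots(figsize=(6, 3))
importances.plot.barh(ax=ax)
ax.set_xlabel("Feature importance")
ax.set_title("Random forest feature importance")
fig.tight_layout()
plt.show()
```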

Output

Conclusion

We first looked at an individual decision tree, the basic building block of a random forest, and then we saw how we can combine hundreds of decision trees in an ensemble model.

REFERENCE

[2] https://enlight.nyc/projects/random-forest
